A game to keep up with the news

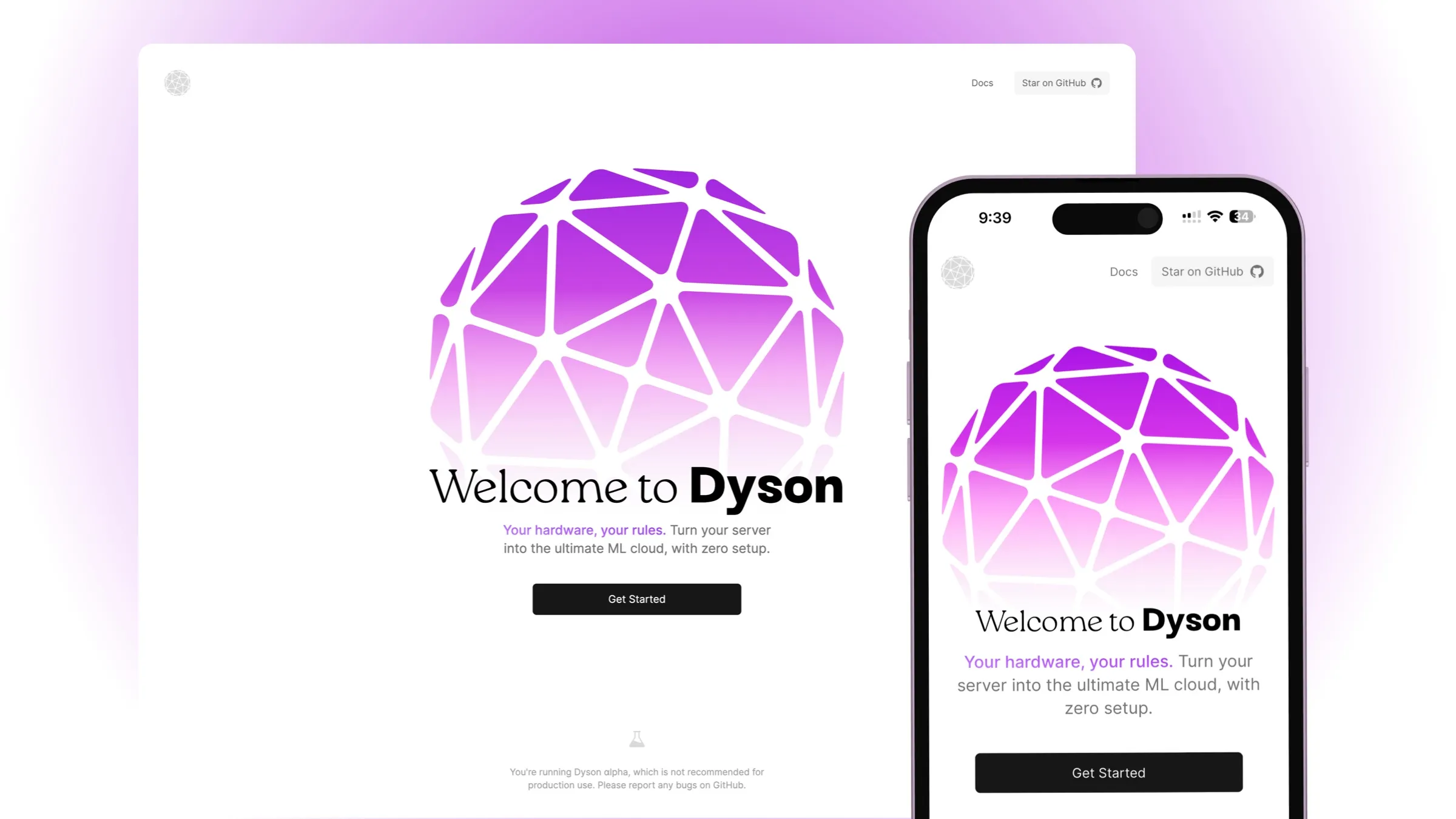

A few days ago, I found a really cool game that turns the news into simple games (trivia & letter unscrambling). The original is a web app with a really nice design, so I took it as a challenge to recreate it as a native iOS app. This is the result!
